Real time clustering and filtering with RTMAC and RTEFC

This page examines clustering and filtering of data in real time, a form of multivariate time series analysis. It is a part of

BDAC - Big Data Approximating Control

Clustering for analyzing real time data online

Organizing and using real time data poses many challenges. Clustering is one approach to organizing and analyzing data. Clustering is simply a grouping of data points (“cases”) considered similar, usually based on a distance measure. Please see the section on clustering basics.

But traditional clustering techniques did not address real time data, for use online. Filtering is usually needed to reduce noise. Clustering for real time should compress the massive and ever-growing quantity of data rather than storing all raw data, update incrementally with new data, recognize operating condition changes, remember unusual conditions even after long time periods, and further filter noise by averaging similar conditions at different times. Real time clustering should also systematically adapt to equipment degradation/renewal over time when desired. For “hard real time” applications (those needing not just high performance, but in particular guaranteed worst case execution time), the methods should ideally be both efficient and non-iterative within one online processing time step. Knowledge of causality should be exploited to further filter out the effects of unmeasured disturbances and process noise. The methods must not require cluster labeling by humans to operate in applications such as industrial plant control. That is, practical methods must be “unsupervised learning”, requiring no human intervention. One the other hand, for some applications such as fault detection and isolation, any clustering implementation should have the optional capability of accepting human-entered cluster labels. Then, in the future it can generate usable messages such as alerts when operations enter a cluster corresponding to a fault.

The focus of this section is on analyzing data collected over time. Data sources include sensors that monitor a system; values of actuators (control system outputs); statuses or other symbolic variables that can be mapped to binary or integer values; or other calculated or sampled signals. For real-time systems, the sampling never stops, and new analysis may be needed immediately at each time step while online. This data is time series data. The time series are multivariate because almost all systems of interest have multiple variables. Even a single-input single-output control system has time series for the input and output values.

Dynamic systems can be addressed by incorporating time trajectories of all variables within a sliding time window, into a single vector at each time step. This is explained in more detail in the section on BDAC data representation.

RTEFC ("Real Time Exponential Filter Clustering") and RTMAC (“Real Time Moving Average Clustering”) are efficient and easily-implemented new real time clustering algorithms that address real-time issues when prototype clustering is applicable. These algorithms create and update clustered data sets for data-driven online applications, useful for either building models or for direct pattern matching without models, as needed for fault detection and isolation, or in BDAC.

These algorithms can be used for static systems or dynamic systems. They also can be used whether or not the systems are time-invariant, unlike traditional K-means. A system is time-invariant if it’s behavior is the same for a given set of inputs at any time. A static system, for example, might otherwise be represented as a set of algebraic equations. If all parameters in those equations remain fixed over time, the system is time-invariant. The state of system changes over time because of changes in inputs, but the model parameters don’t change. And measurements of the system vary because of noise. If some parameters in the algebraic model can change over time, the system is time-varying. Dynamic systems include those that might otherwise be modeled by differential equations, or the equivalent versions written in discrete time form. Again, these can be time-invariant or not, depending on whether or not all parameters remain constant over time. A time invariant dynamic system behaves the same for a given set of historical inputs at any time. For systems that are not time-invariant, behavior changes over time, and model-based systems require periodic updates to adapt to new model parameters.

The system does not need to have a mathematical model - it just needs to have associated time series data. So, for instance, traffic speed data for road segments over time can be represented and used without attempting to build a model at all.

The clustering methods here were developed as a way to create and maintain an online data set for use in the model-free BDAC approach to control, introduced in the paper Big Data Approximating Control (BDAC) -- A new model-free estimation and control paradigm based on pattern matching and approximation . BDAC uses the centroids as an efficient way to represent possible system behavior, as a way to filter out the effects of noise and umeasured disturbances, and also track process changes. However, models developed for process control methods like MPC (Model Predictive Control) or other applications are also subject to the same issues of noise, unmeasured disturbances, and selection of the best data for use in modeling to incorporate unusual conditions as well as normal conditions. These new clustering techniques are applicable for pre-treating the data for model identification, as well as preparing and maintaining a data set for subsequent neural network training. More generally,they should be suitable for data-driven real-time applications that can use prototype clustering, where centroids are the main interest.

Limitations of K-means clustering for real time use

K-means clustering is still popular because of its simplicity. But K-means has major drawbacks for real time use, where new data is always arriving and the process behavior may change over time. The “best” K is unknown, and can grow in many applications. It may also be difficult to choose an initial set of centroids, and the results are sensitive to this initial choice. Following implementation in many process control applications, new operating modes may occur, and the process changes over time due to feed or product changes, equipment degradation and renewal, and so on. A related problem is that the core algorithm doesn’t specify how to recognize and add new clusters. Another problem is that each new case causes an unpredictable number of iterations. This can be a problem in “hard real time” systems where computation must be guaranteed to complete in a fixed time. But the biggest problem is much more basic: there is no mechanism to forget old data. The data set will continue to grow forever, impacting memory usage and computing time. Furthermore, some of that data will become outdated, and should not be weighted equally with more current data. So the “forgetting” issue encompases both physical memory use and the impact of outdated data that may reflect only older system behavior.

Real time clustering with RTMAC (Real Time Moving Average Clustering)

RTMAC (Real Time Moving Average Clustering), sometimes shortened just MAC, is a variation on K-Means clustering. In K-means, set a limit nMax on the maximum number of cases per cluster. Then, with each new case:

- Find the cluster with the closest centroid

- Include the new case in that cluster

- If that cluster already has the maximum size nMax, then delete the oldest member of that cluster

- Calculate new centroid values and optionally iterate as in K-means

A variation on this algorithm is to specify a maximum cluster radius so that new clusters can be started when needed to accomodate newly discovered system behavior. This also allows the clustering to start with no initial cases, adding new cluster centers as needed. (A parameter nClustersMax to limit the total number of clusters is also useful.) This approach is demonstrated in the section on RTEFC below.

RTMAC is analogous to moving average filters of length nMax time steps in time series analysis. The initial new centroid immediately after adding a new case is in effect the output of that cluster’s moving average filters: the newest case is added, the oldest case is dropped, and the moving average for each dimension is recomputed. For each new case, only one centroid is updated (before the K-means iteration). This suggests that other filters from time series analysis should be considered, especially those requiring less computational effort and data storage. This leads to the development of RTEFC, which also eliminates the K-means iteration.

Real time clustering with RTEFC (Real Time Exponential Filter Clustering)

RTEFC (Real Time Exponential Filter Clustering), sometimes shortened to just EFC, replaces the moving average calculation with an exponential filter. This is applicable in the important special case where we care about using the cluster centers (centroids), rather than the original data. For instance, in BDAC, the centroids are used as approximate representatives of feasible system trajectories.

The cluster centers (centroids) are stored as the rows {si} of a matrix S. We define a new case snew as “novel” if the closest distance d to every existing case exceeds a threshold radius, so that dclose = || snew - sclose || , where sclose is the closest case to snew .

The RTEFC algorithm starts with an empty matrix S. At each time step, given a new case snew,

- Calculate distances from snew to rows of S, finding closest match sclose and its distance dclose

- If snew is novel and dim(S) < nCasesMax

- then add snew to S

- else update sclose to asclose + (1-a)snew (exponential filtering)

where dim(S) is the number of rows of S (number of cases) and a is the exponential filter constant. The parameter nCasesMax is a limit on the total number of cases allowed (which is the same as the number of clusters). Optionally, we remove linearly dependent cases for subsequent use of centroids with linear methods.

RTEFC operation is shown in the following diagram. (Click for full sized image.)

Understanding the filtering behavior in RTEFC and RTMAC

The filtered output s[k] of a (vector) exponential filter over time steps k is

s[k] = a s[k-1] + (1-a) snew[k]

For a fixed time step T between samples, this filter is equivalent to a first order lag with time constant  : :

a = exp(-T/ ) or equivalently ) or equivalently  = -T/log(a) = -T/log(a)

The time constant  is the time it takes to achieve 63.21% of the final value in response to a step change in the input. 98.17% of the response is complete in 4 is the time it takes to achieve 63.21% of the final value in response to a step change in the input. 98.17% of the response is complete in 4 . For example, for a sample time of T = 1 minute, a = 0.9 corresponds to . For example, for a sample time of T = 1 minute, a = 0.9 corresponds to  = 9.49 minutes. For a = 0.998, the corresponding = 9.49 minutes. For a = 0.998, the corresponding  = 499.5 minutes. The step response of a single-variable exponential filter follows (click for a full-sized image) : = 499.5 minutes. The step response of a single-variable exponential filter follows (click for a full-sized image) :

But RTEFC is not just simple exponential filtering. One obvious difference is that RTEFC is multivariable (although it shares a single filter parameter a for n filters for an entire vector of n values). But more significantly, at each time step, only one cluster is updated (or created). The rest of the clusters remain unchanged. This makes RTEFC efficient, and also eliminates the unpredictable amounts of iteration found in K-means clustering. This approach also allows unusual operating conditions to be remembered, because clusters representing unusual conditions are not updated during more normal operations. This contrasts with other techniques such as recursive least squares approaches to building models. Those recursive approaches uniformly forget all old data exponentially. For typical process control applications, there are nonlinearities and a variety of different operating conditions, so simple recursive approaches are poorly suited compared to clustering approaches that can remember the unusual conditions.

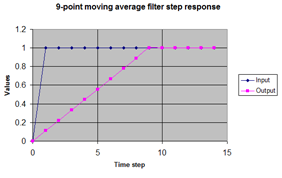

For moving average filtering, the plot is somewhat different. The response is a straight line, and the time to 100% completion of the response is the length of the averaging period. (Click for a full-sized image) For moving average filtering, the plot is somewhat different. The response is a straight line, and the time to 100% completion of the response is the length of the averaging period. (Click for a full-sized image)

When addressing noise reduction, there are several types of noise or errors to consider. Sources of noise are discussed in the noise section of the Guide to Fault Detection and Diagnosis. Routine, high frequency “normal” noise, is often modeled as gaussian (normally distributed). Exponential or moving average filtering, along with other low-pass filters, are effective at reducing the effects of this noise. However, they also slow down response to true changes in their input. There is a tradeoff between noise reduction and response time lags. RTEFC and RTMAC are effective at reducing the effects of high frequency noise just from their filtering behavior. But they are more effective because of the additional “case filtering” that occurs when similar cases at different times are combined. Errors are canceled against each other not just from recent history as in normal filtering, but also from occurrences widely separated in time. The noise cancellation from case filtering is effective for high or low frequencies.

“Spikes” are another type of noise: drastic short term changes. Often these should be ignored as meaningless transients. Exponential and moving average filters help reduce the effect somewhat because of averaging against other values, but address this only partly. In traditional filtering, “spike filters” separately handle this type of noise. Similarly, RTEFC and RTMAC provide only some protection against spikes. Some other real-time clustering methods address this through the addition of “temporary clusters”, loosely analogous to spike filtering. Future enhanced versions of RTEFC and RTMAC will include some similar protection.

So, are RTMAC and RTEFC examples of filtering or clustering? Yes!

This filter behavior isn’t just about noise reduction. It’s also about forgetting old data for adaptation when desired

The filtering character of RTEFC and RTMAC really serves two purposes. One is noise reduction, and the other is a means to forget old data when new data should override it. First, RTEFC and RTMAC limit data storage, unlike K-means. But, in addition to the physical memory issue, K-means does not forget the effects of old data. Old data is never forgotten. The effects of every data point in K-means are weighted equally forever. A related problem is that after running a long time, new data has essentially no effect on the centroids -- each data point has a weight of 1/k in the average, where k is the number of time steps. These are problems when dealing with systems that change over time.

The ideal system will gradually forget old data when it is replaced by newer data at about the same conditions. It will also remember the old data at unusual conditions because there is no new data near those conditions most of the time. RTEFC and RTMAC accomplish this. Exponential decay of the effect of old data as is done in RTEFC is the typical approach to forgetting. this exponential forgetting happens automatically in algorithms such as Kalman filtering (with process noise), RLS (Recursive Least Squares), and others. But in those approaches, all data is forgotten exponentially, whether it is unusual or not. Clustering is needed to preserve the unusual conditions. RTEFC and RTMAC retain the old unusual data, except in one case: when the number of clusters reaches nCasesMax. Then, if new data happens to be nearest to the unusual cluster, it can pull the centroid away from the unusual conditions. That is called “forced filtering”. This behavior is unusual in RTEFC if nCasesMax is large, in contrast with basic K-means, where every new entry is “forced” because of the fixed number of centroids.

Adaptation is sometimes good, sometimes bad

If the data already contain all operating modes and a full range of conditions, and the process doesn’t change, then you don’t need adaptation. It’s time invariant. Any adaptation that occurs would reflect problems in sensors, equipment, or operation. That should be detected without adapting to the faulty operation and absorbing into a new “normal” case. This time invariance of the process may be a reasonable assumption for embedded systems in manufactured products like airplanes or refrigerators, given experimental data as well as simulations. But it’s not a good assumption for operating plants like refineries or chemical plants. After installation of a monitoring, decision support, or control system, it may take years before some operating modes, plant goals, feed characteristics, product specs, process redesign, or other changes occur. And there are constant cycles of degradation and periodic renewal and eventual equipment replacement. For instance: catalyst decay, periodic regeneration with less and less effect over time, and eventual replacement with new catalyst (and even new catalyst type that may have different characteristics). Heat exchangers and some distillation tower trays get fouled, leading to reduced heat transfer or flow, and may eventually get cleaned. As an example of the importance of this in a different application, the dominant advanced control approach in the process industries, called MPC (Model Predictive Control), finds it essential to include fudge factors and slack variables to accommodate the mismatch between the model and the real world. (In effect, MPC controls the model output, which is never actually right).

If the applications include dynamics instead of just steady state values, the problem gets worse. Every time a controller mode changes from values such as manual, automatic, cascade, or a computer mode with an advanced control scheme, the behavior of the the affected variables changes completely. For instance, for a temperature controller that manipulates steam flow in manual mode, the steam flow will be held constant while a temperature changes due to disturbances. But in automatic control mode, the temperature should stay close to its target and the steam flow should vary to account for disturbances. This issue actually affects the possible steady state values seen by the monitoring system as well. Some conditions away from controller setpoints will only be seen when controllers are in manual, which might not occur for months or years. If the controller is badly tuned with a high gain, there may be large cyclic excursions in both temperature and steam flow. If the controller is badly tuned with a low gain, the temperature may drift significantly when a disturbance occurs. There are thousands of controllers in a typical plant, and any of them might be retuned from time to time, including bad tuning by inexperienced personnel.

An example of RTEFC building training cases online for data-driven applications

The following plot shows an example of RTEFC operation online over time. The vertical axis shows the identity of the closest cluster number to the new case at each time step. The cluster number ids start at 0. The blue dots identify the closest case, while the green dots across the bottom of the plot highlight when a novel case is found (so that a new cluster is started), and some other special conditions. This particular application (BDAC) has individual cases representing entire trajectories of multiple variables over a time window. It ignores some data for reasons such as being at steady state, or not having run long enough to collect data for an entire time window. In those cases, the cluster id is shown as less than zero. Those application specific details of BDAC don’t matter for just discussing RTEFC. (Click on the plot for a full-sized image)

Once the first cluster (numbered 0) is started, the next few cases are close enough that they are absorbed in that first cluster. After several time periods, eventually a new case is different enough that a new cluster is started. Several subsequent cases are absorbed into that cluster. This continues for a while, but then due to some cyclic behavior, cases start to repeat around time 300. Those cases are absorbed into earlier clusters. Around time 700, a different set of operating conditions leads to the creation of new clusters. Over time, fewer and fewer new clusters are formed. Instead, the effects of noise and unmeasured variables are filtered out. Once the first cluster (numbered 0) is started, the next few cases are close enough that they are absorbed in that first cluster. After several time periods, eventually a new case is different enough that a new cluster is started. Several subsequent cases are absorbed into that cluster. This continues for a while, but then due to some cyclic behavior, cases start to repeat around time 300. Those cases are absorbed into earlier clusters. Around time 700, a different set of operating conditions leads to the creation of new clusters. Over time, fewer and fewer new clusters are formed. Instead, the effects of noise and unmeasured variables are filtered out.

“Causal filtering”: Clustering and filtering for causal systems

For causal systems, process input changes subsequently cause process output changes. Analyzing the relationship between the inputs and outputs is a key step in numerous fields, including process control. Ideally, for a given set of process inputs (including history in the case of dynamic processes), the outputs would be the same. However, that is not the case because of

- Noise or errors in input and output measurements

- Unmeasured disturbances

- Process noise

- Process changes over time

We often want to cluster just based on process inputs, to filter out the effects of noise and unmeasured disturbances, and also to adapt to process changes over time. The solution is to set the weights of the process outputs to 0 in any norm or distance calculation. This simple change has a large impact. When similar input conditions arise from time to time, the effects of the unmeasured disturbances, process noise, and sensor noise on the process output variables average out closer to zero. Then, what is left is more noise-free output behavior that can be predicted based on known inputs.

Supervised vs. unsupervised learning for use in classification

All of the clustering techniques discussed above develop clusters without human intervention: they are “unsupervised” and “autonomous”. RTEFC and RTMAC generate new clusters when they are discovered, while K-means is more likely to absorb them into the arbitrarily chosen initial clusters. Each cluster can be automatically labeled by default as “cluster 1”, “cluster 2”, and so on. For any new observation, a classification task answers the question “what class is this in?” A class is a set of conditions that might correspond to a particular operating mode or a fault, for instance. A class includes one or more clusters. One approach to classification is to pick the cluster with the closest centroid, assuming that centroid has been assigned to a class.

Clustering can be converted to at least partly supervised form by having humans optionally label at least some the clusters when possible. For instance, for a fault detection application, a person adds a label “abnormal” to at least the clusters for conditions considered abnormal. For a full diagnosis (fault isolation), a more specific name such as “loss of power to unit 3” is needed. From a user interface standpoint, for online monitoring applications, the ability to add class labels to at least some clusters, especially the ones associated with faults, is important. Then a useful conclusion can be presented to the users. “Alert -- You’re in cluster 18” won’t be at all helpful to a plant operator, and it may require a lot of engineering time to even help an engineer. Unfortunately, the usual problem will be finding someone to actually enter that information, since the labeling beyond some numbered default requires human intervention.

Adaptation complicates all this. The issues involved in simultaneous online learning and classification & fault detection are challenging. Since some plant operating conditions might not be encountered until years after installation of the monitoring system, when a novel data point is encountered, is it: (1) an outlier to be ignored, (2) a new normal condition to be remembered, or (3) a new defined fault to be remembered. It’s not always going to be clear. When fault detection or outlier detection is part of the overall application beyond the clustering, it is best to detect the problem soon, then stop creating and updating a new cluster unless the fault should be remembered in a new cluster.

Other approaches to real time clustering

Micro-clustering

One general approach to extending traditional clustering into real time is to split the processing into online and offline phases. The online portion computes “micro clusters”, which accumulate summary statistics for cases that are already close to each other. New cases are added to a nearby micro cluster. Then, periodically, an offline clustering technique clusters the micro clusters. There is special handling for anomaly detection, so that new observations distant from existing ones are added to temporary micro clusters, whose ultimate fate is decided after multiple additional samples. Otherwise the online portion could resemble the “forced filtering” case in RTEFC/RTMAC or basic K-means, which would suppress discovery of new clusters. Some methods, such as DenStream maintain a weight that is an exponential function of age, analogous to RTEFC behavior. The method only add a cluster for offline use when the age exceeds a threshold. This suppresses short term outliers. Older clusters are eventually removed. DBstream also is a micro-clustering approach with age-weighted micro-clusters, but anomaly detection is done in the offline phase. There are also hierarchical clustering techniques such as Clustree that use micro clusters.

The general idea of temporary clustering until outliers are rejected may be added to future versions of RTEFC/RTMAC, but without the offline phase, and still ensuring that significant unusual cases are maintained.

Mini-batching

With mini-batching, multiple new cases are clustered in batches. For instance, there is a mini-batch version of K-means that carries over centroids from the previous batch. This reduces convergence time for the unpredictable iteration in K-means, but doesn’t address discovery of new clusters.

Recursive Density Estimation (RDE)

There are other algorithms for real time clustering that take the approach of storing minimal amounts of information rather than all the raw data, eliminating the offline processing, and performing incremental updates at each time step, as in RTEFC and RTMAC. For fault detection and isolation in particular, an approach called Recursive Density Estimation (RDE) is described in Fully unsupervised fault detection and identification based on recursive density estimation and self-evolving cloud-based classifier, in 2014 by Costa, Angelov, and Guedes, and A new unsupervised approach to fault detection and identification, by the same authors in 2014. Instead of carrying over just the mean of each cluster from one time step to the next, RDE also carries over a scaling factor to indicate the spread of the data, loosely playing the role of a cluster radius or variance. In effect, the cluster radius is variable, not fixed, although there is a parameter called “zone of influence” that does need to be chosen that plays a similar role. Incremental updates are performed based on an assumption that the data are distributed following a Cauchy probability density function, rather than some other typical approaches such as Gaussian (normal) distributions. Assignment of new cases to clusters, and classification, is through a much more complex fuzzy rule-based approach than RTEFC/RTMAC. Handling of outlier data before committing to new clusters is carefully handled, using an approach akin to the temporary clusters of the micro cluster approach, combined with anomaly detection by sequential analysis vaguely reminiscent of a Sequential Probability Ratio Test (SPRT) or CUSUM to distinguish outliers from genuine new cases.

The basic RDE methodology did not have a mechanism for forgetting the effects of old data, so that, after a long run, cluster means and scale factors mostly ignored new data as in K-means. This was addressed in 2015 in RDE with Forgetting: An approximate solution for large values of k with an application to fault detection problems, by Bezerra, Costa, Guedes, and Angelov. RDE with forgetting included exponential forgetting, with an exponential filter equation and factor for the mean and scale factor, analogous to RTEFC.

Taking RDE as an example of minimizing the amount of data stored, RTEFC could be considered an even more extreme example of that philosophy, storing only the mean, and keeping all computation extremely simple.

Copyright 2017-2020, Greg Stanley

(Return to BDAC - Big Data Approximating Control )

|